Abstract

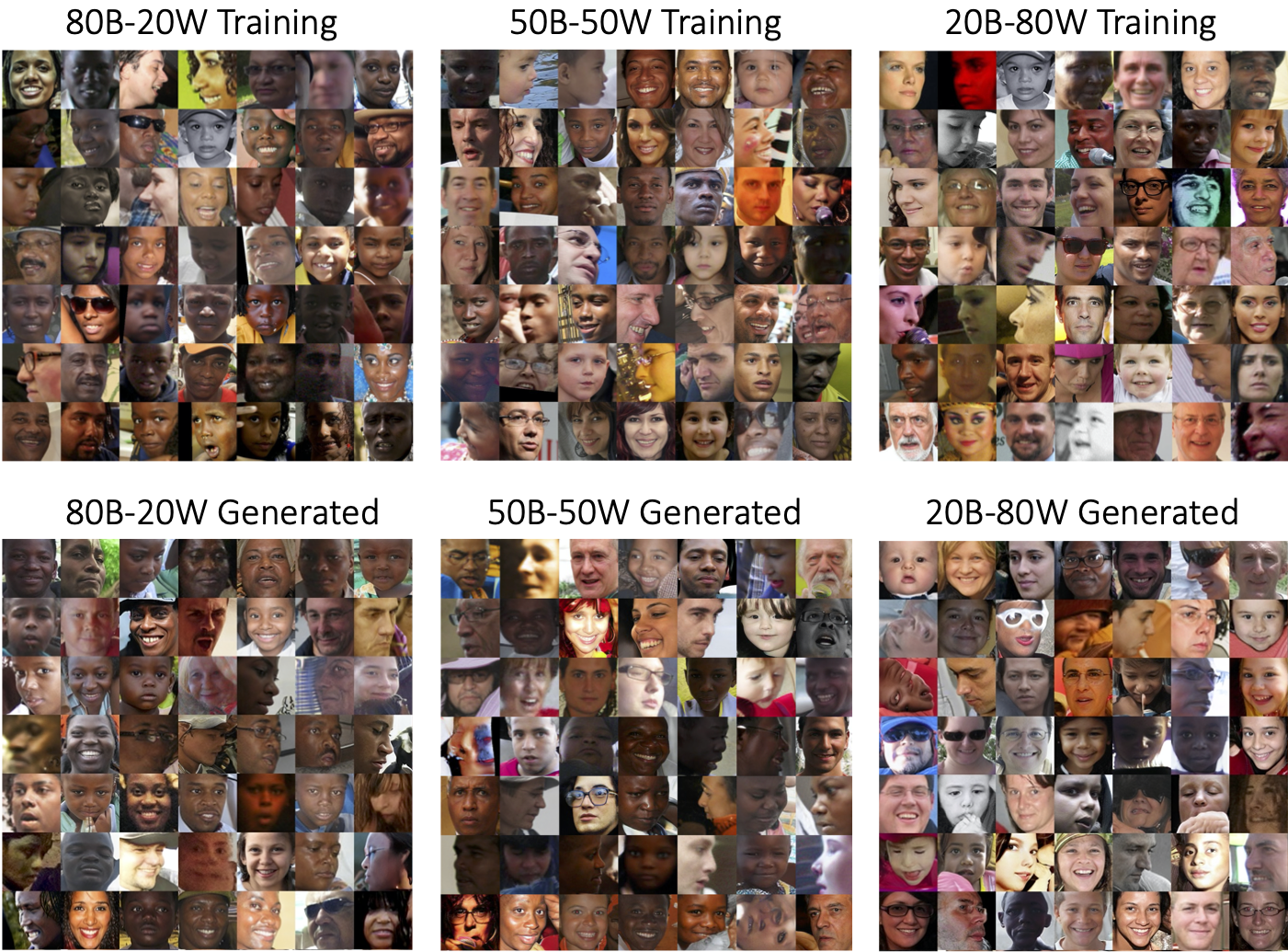

In this work, we study how the racial composition of training datasets affects the performance and evaluation of generative image models. By examining and controlling the racial distributions of various training datasets, we observe how different training distributions affect both the quality of generated images and their racial distribution. Our results show that the racial composition of generated images preserves that of the training data. However, we observe that truncation, a technique used to generate higher-quality images during inference, exacerbates racial imbalances in the data. Lastly, when examining the relationship between image quality and race, we find that the images of a given race with the highest perceived visual quality come from a distribution in which that race is well represented, and that annotators consistently prefer generated images of white people over those of Black people.

Results

The video below shows 100 images sampled from an FFHQ-trained StyleGAN2 with a fixed random seed and a varying level of truncation, starting with no truncation and ending with full truncation. We find that truncation exacerbates racial imbalances in the training data.
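For readers unfamiliar with truncation, the following is a minimal NumPy sketch of the standard truncation trick, which interpolates each latent toward the average latent. It is an illustration under stated assumptions, not the code used to produce the video: the random latents stand in for the output of a StyleGAN2 mapping network, `w_avg` stands in for the average latent the generator tracks during training, and the names `truncate` and `mean_distance` are hypothetical.

```python
import numpy as np


def truncate(w, w_avg, psi):
    """Truncation trick: interpolate latents toward the average latent.

    psi = 1.0 leaves the latents unchanged (no truncation);
    psi = 0.0 collapses every latent onto w_avg (full truncation).
    """
    return w_avg + psi * (w - w_avg)


# Fixed seed, so the same 100 latents are reused at every truncation level.
rng = np.random.default_rng(0)

# Assumption: random vectors stand in for mapped latents of a real generator.
w = rng.standard_normal((100, 512))

# Assumption: the sample mean stands in for the generator's tracked average latent.
w_avg = w.mean(axis=0)


def mean_distance(psi):
    """Average Euclidean distance of the truncated latents from w_avg."""
    truncated = truncate(w, w_avg, psi)
    return float(np.linalg.norm(truncated - w_avg, axis=1).mean())


# Sweep from no truncation (psi = 1) to full truncation (psi = 0).
psis = np.linspace(1.0, 0.0, 5)
spreads = [mean_distance(psi) for psi in psis]
```

As `psi` decreases, the latents concentrate around the average latent, so the sampled images become less diverse. This loss of diversity is the mechanism through which truncation can amplify whatever imbalance already exists in the training data.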

Citation

@inproceedings{maluleke2022studying,
  author = {Maluleke, Vongani H and Thakkar, Neerja and Brooks, Tim and Weber, Ethan and Darrell, Trevor and Efros, Alexei A and Kanazawa, Angjoo and Guillory, Devin},
  title = {Studying Bias in GANs through the Lens of Race},
  booktitle = {European Conference on Computer Vision (ECCV)},
  year = {2022}
}

Acknowledgments

We thank Hany Farid, Judy Hoffman, Aaron Hertzmann, Bryan Russell, and Deborah Raji for useful discussions and feedback. This work was supported by the BAIR/BDD sponsors, ONR MURI N00014-21-1-2801, and NSF Graduate Fellowships. The study of annotator bias was performed under IRB Protocol ID 2022-04-15236.

Project webpage based on StyleGAN3.